Building an Agent? You Need an Environment

The Gap in AI Agent Development

Agents live in messy systems with unpredictable users, interconnected tools, and cascading consequences. Unlike traditional software where inputs and outputs are fully controlled, an agent’s flexible I/O and its reasoning capability are both its superpower and its failure mode.

If you want to build autonomous, high-value, and action-based agents, agents beyond chatbots with read-only access, you need an environment where they can practice, fail safely, and improve before they deploy to production. This post explains why and how simulation environments become an essential part of the stack for agent development. This is why we built Veris AI.

What Exactly Is an Environment?

An environment is your agent’s universe: everything it can perceive, interact with, and influence. In production, this includes:

- Real users with distinct styles and expectations

- Tools and services the agent can access

- Tasks and scenarios that the agent encounters day to day

A compact way to model this is a partially observable Markov decision process (POMDP)

$$E = (S, A, O, T, \Omega, R).$$

Here, $S$ is the set of world states, $A$ the actions, $O$ the possible observations, $T(s’ \mid s, a)$ the probability of moving to state $s’$ after taking action $a$ in state $s$, $\Omega(o \mid s’, a)$ the probability of observing $o$ after arriving in $s’$, and $R(s, a)$ the immediate reward. For intuition, think of a foggy-maze video game: you never see the whole map, only glimpses $o \in O$; you pick a move $a \in A$; the game updates the hidden state $s’ \sim T(\cdot \mid s, a)$; you receive a new glimpse $o \sim \Omega(\cdot \mid s’, a)$; and you score points $R(s, a)$.

You do not need to lean on heavy math to use this framing. It is enough to remember that agents choose actions under uncertainty, and what matters is the sequence of state changes they cause.

The Production Environment Paradox

Production is the actual environment for the agent, and in theory, it is perfect to test and train on it: deploying directly to production and learning from live behavior is the most effective way for agents to learn. You would swap models, run experiments, refine prompts and tools, and even run online reinforcement learning against real feedback.

Obviously, in most cases you cannot do that. The blockers are concrete:

- Safety and compliance risks with PII and policy violations

- Customer tolerance for failure is low, especially for multi-step workflows

- Incident budgets, rate limits, and change windows

Users are not guinea pigs for agent training. If the agent is the product, you cannot sell “be patient while it learns.”

Is Traditional Evals the Solution for this Paradox?

Static evals grade answers, not outcomes. Usually this comes in the form of a Golden Dataset with labeled tasks. Static evals fail to measure multi-turn dynamics and recovery from errors, side effects of tool use, state accumulation over time or edge cases in sequential decision making. Static evals are built for a world with simple input/outputs: an ML model predicting the click-through rate from historical features, or on LLM call assigning an intent category to a message.

Agents, however, change their environments across steps. In the end, what you want to know is whether the final state, including the state of all tools, is correct or not.

Example. A customer asks to cancel an order and get a refund.

- The agent apologizes and says a refund is issued. A static eval would score this as correct.

- In reality, the agent must check shipment status, restock inventory if appropriate, issue a partial refund if a coupon was involved, update CRM, and send a confirmation email with the correct policy language.

- What matters is whether the final conditions are correct across tools, not just the text output.

Enter Simulation Environments

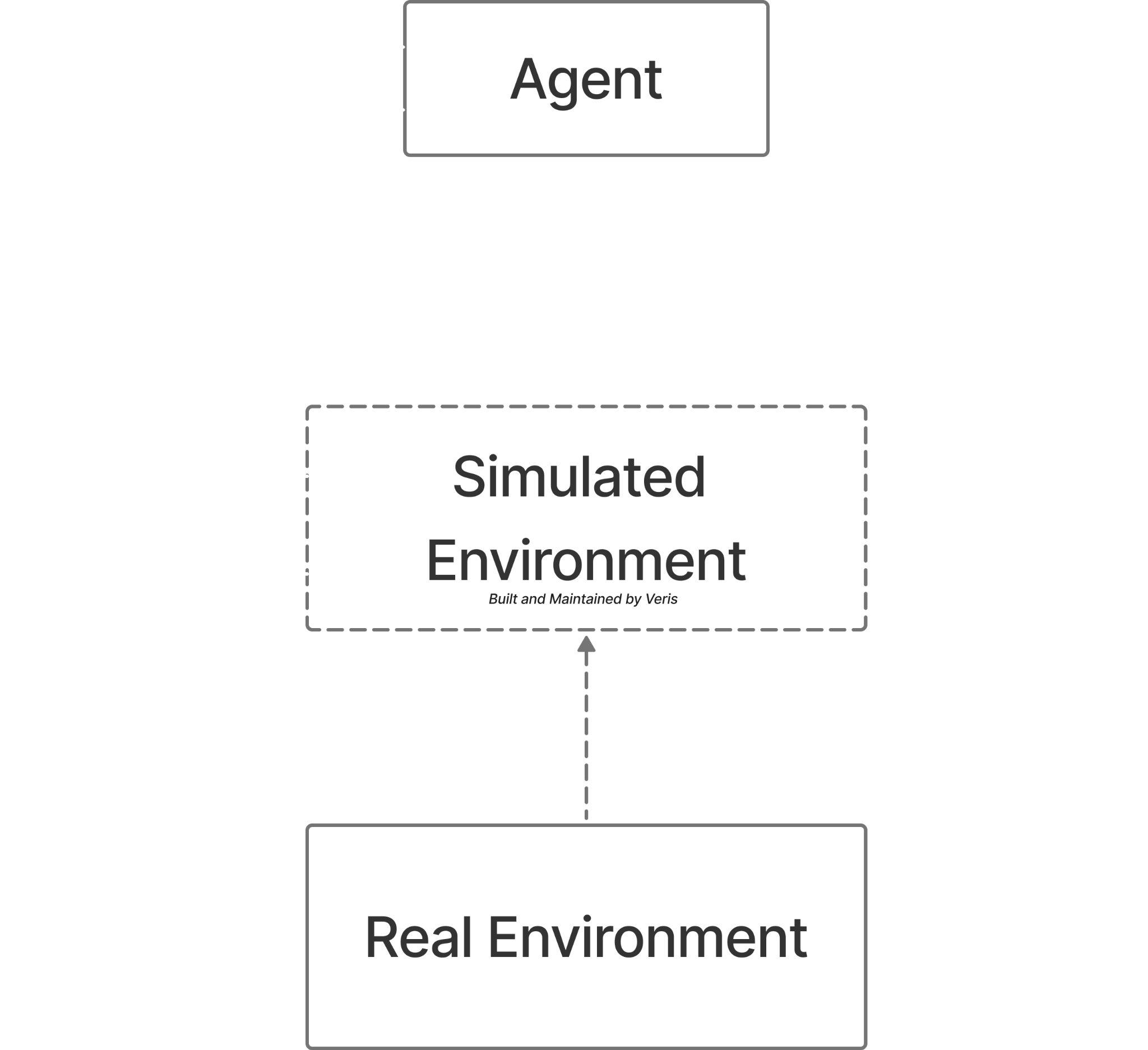

A simulation environment is a safe parallel world where agents can act, break things, and learn without harming customers or assets (e.g. databases). When building a high-fidelity simulation environment, the goal is to make simulated behavior predictive of production behavior.

To train high-quality agents in this simulated environment, the sim-to-real gap, $\Delta$, the difference between real and simulated outcomes on metrics we care about should be minimized:

- Task success gap: $$\left| \hat{p}_{\text{sim}} - \hat{p}_{\text{real}} \right|$$

- Tool fidelity: similarity of API error and latency distributions

- User interaction fidelity: similarity of both the user types (characteristics and behaviour) and their communication with the agent (language, tone, and interaction style)

- Scenario distribution and coverage: how similar are the tasks that the agent sees in production vs simulation

- Post-condition distance: diff between expected and observed world states

We do not need perfect fidelity. We need the right fidelity for the objectives we are optimizing.

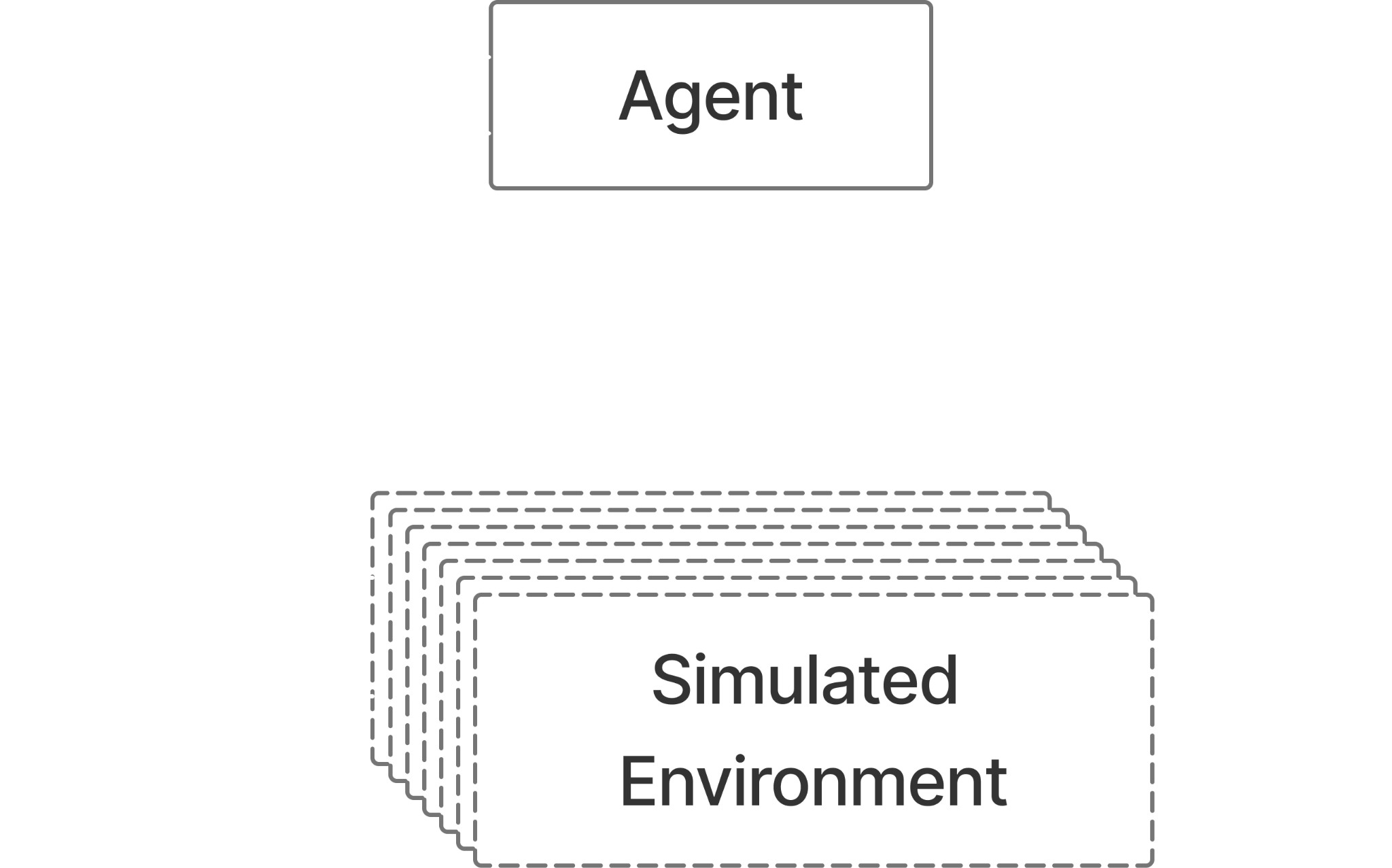

Another important benefit of having a simulation environment is that you can infinitely scale and parallelize to collect lots of datapoints and conduct many experiments. You don’t need to wait to collect real user data to start optimizing your agent.

Anatomy of a Simulation Environment

A simulation environment is essentially a controlled sandbox – think a Docker container containing:

- State Management System

- Initial state snapshots: The world as it exists when your agent encounters a problem

- State transitions: How the world changes based on agent actions

- Simulated assets: Mocked tools, synthetic users, and their evolving states

- Task Scenarios

- Problem descriptions your agent must solve

- Contextual information about the environment

- Success criteria and constraints

- Reward/Evaluation System

- Verification functions that check if tasks are completed correctly

- Intermediate reward signals for multi-step processes

- Can use LLM judges, unit tests, or custom rubrics

The Training-in-Simulation Advantage

Training and testing in simulation gives you:

- Safe failure and fast iteration

- Reproducibility but also controlled variation

- Edge-case coverage on demand

- Systematic regression and A/B testing of models, prompts, and tools

Veris AI: Environment as a Service

Building and maintaining high-value environments is more work than building the agent itself. As agent functionality grows, you must mock sophisticated tools, simulate realistic users, maintain state consistency, track drift from production, and balance fidelity with cost and speed.

That is what we are building at Veris AI. You can offload all those complexities to us and focus on building the best agentic product. Veris provides:

- High-fidelity mock version of all agent tools: Prebuilt simulations for common services and an SDK to faithfully mock your unique tools.

- High-fidelity user simulation. Realistic users as well as behaviors and communication patterns.

- Advanced state management. Robust tracking so simulations stay consistent and replayable.

- Custom rubric execution. Run evaluation criteria on full trajectories.

- Optimizer-agnostic workflows. Use RL, supervised fine-tuning, automatic prompt optimization, or hybrid approaches.

Teams use Veris AI to cut iteration time, and reduce deployment risk without turning users into test subjects.

The Future of Agent Development

As agents take on higher-stakes tasks, the gap between “works in demo” and “works in production” widens. Simulation environments are the only way to trust your agent. They are the infrastructure that lets you measure, improve, and ship responsibly.

If you’re shipping an agent, you need an environment to train it in. You may build it in house or leave it to Veris AI and instead focus on building your product.

To get in touch, access technical documentation, deployment guides, or to schedule a platform demonstration, contact hello@veris.ai or visit https://veris.ai.